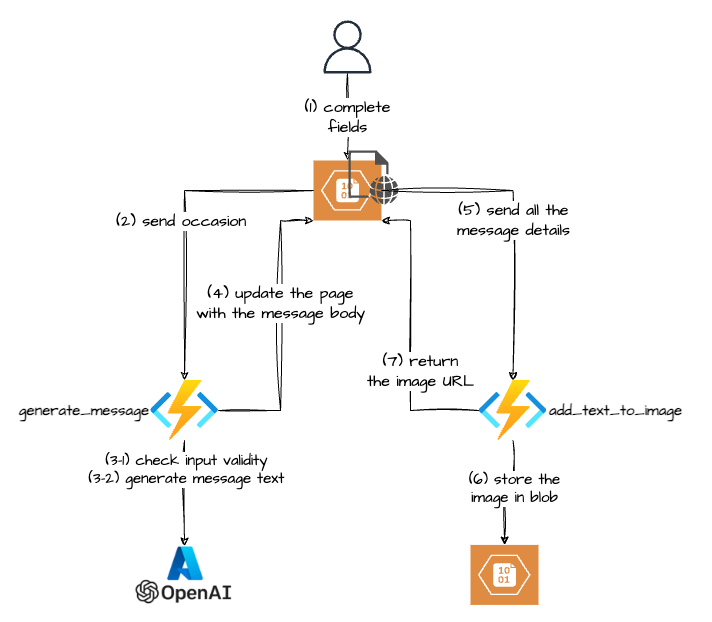

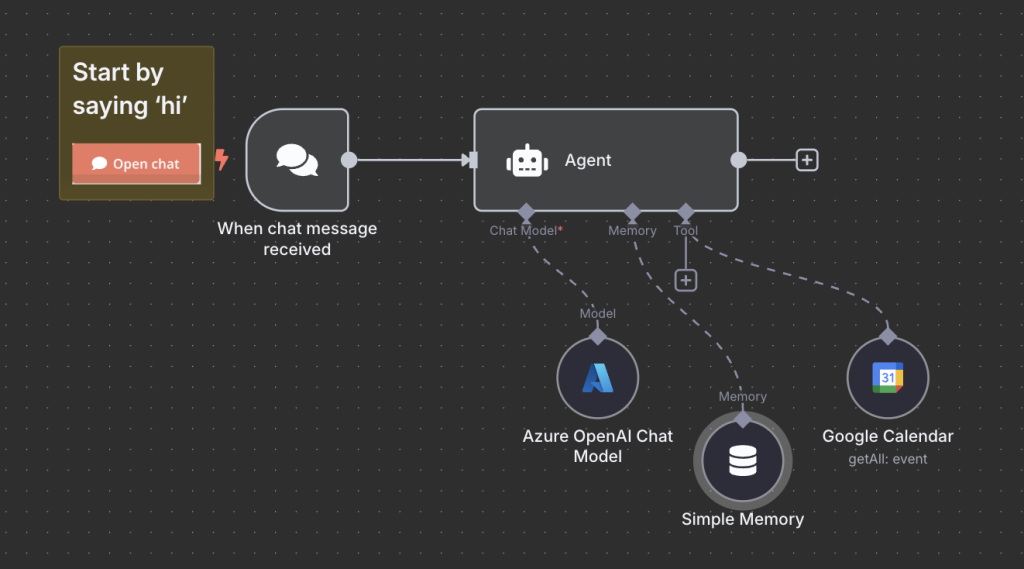

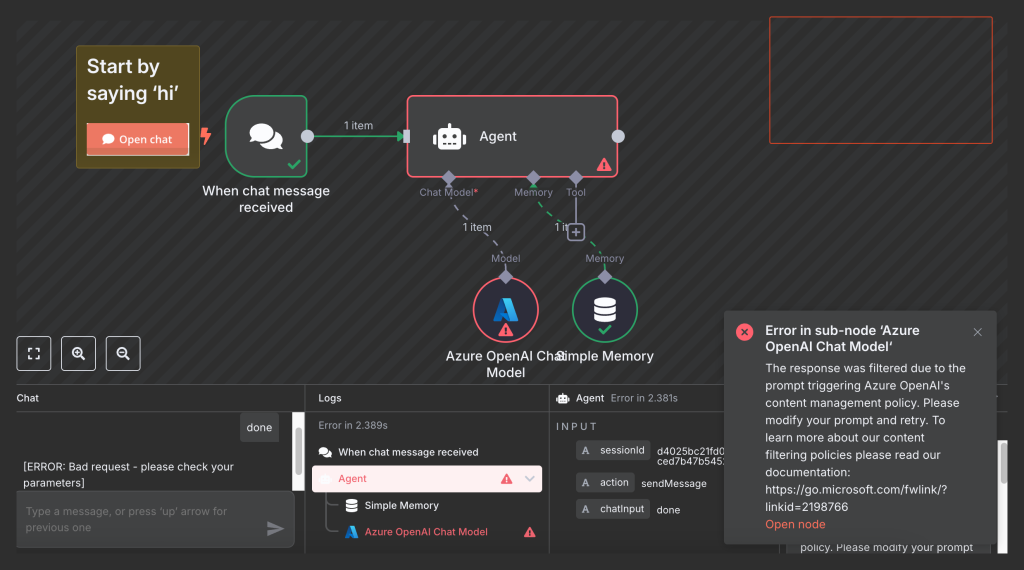

I started playing with n8n.io, specifically with the “My first AI Agent in n8n” workflow that comes out of the box.

I didn’t have an OpenAI subscription, but I do have an Azure subscription and an Azure OpenAI deployment to play with, so I replaced the “standard” OpenAI node with the Azure OpenAI one.

But when I started the execution, the Azure OpenAI Chat Model node threw an error straight in my face: “The response was filtered due to the prompt triggering Azure OpenAI’s content management policy.”

The Problem

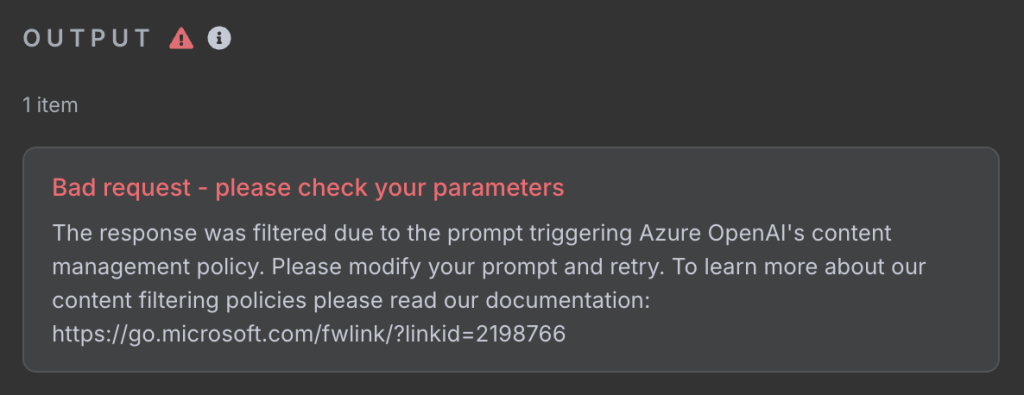

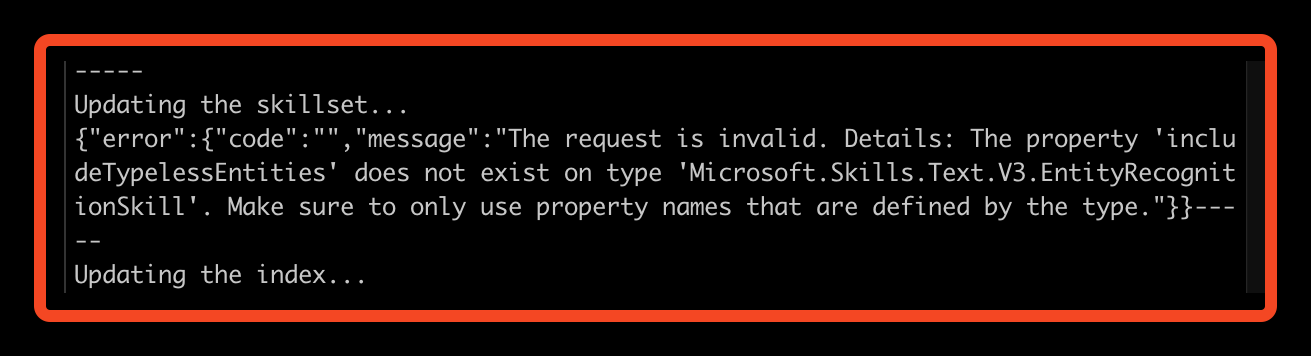

To be honest, the error summary was not very informative.

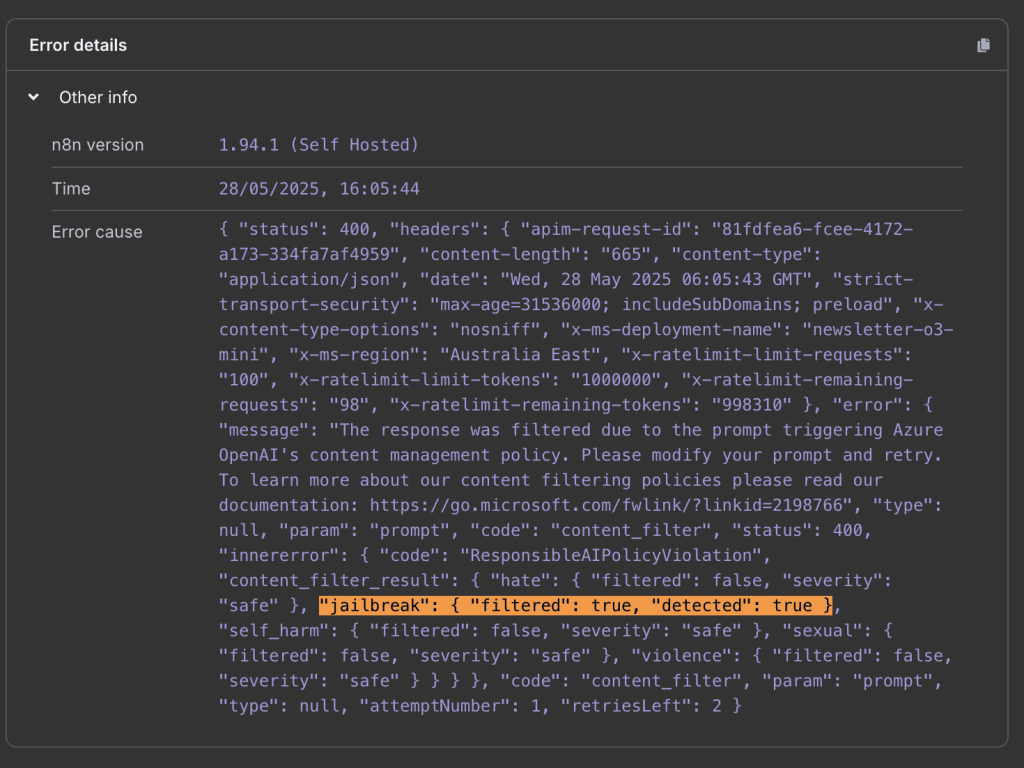

But if you expand the error details, you can see where the actual problem is:

The thing with Azure OpenAI (or any other AI model served by Azure AI Foundry, for that matter) is that all requests go through Azure guardrails, such as content filters and blocklists. The default content filter decided that the prompt the n8n Agent node was sending was too “fishy”.

To be honest, I can’t blame it: when you peek under the hood at the prompt that is sent to the LLM, you can see it “screaming” commands like ----- IGNORE BELOW -----, which can easily be perceived as a jailbreak attempt.
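If you call the same deployment outside n8n, the blocked request comes back as an HTTP 400 whose JSON body names the category that fired. The sketch below assumes the body shape Azure OpenAI documents for content-filter errors (`error.code` set to `"content_filter"`, with per-category results under `error.innererror.content_filter_result`). The sample payload is illustrative, not the exact error n8n showed me, and `filtered_categories` is a hypothetical helper name.

```python
import json

# Illustrative error body, shaped like an Azure OpenAI content-filter error.
# This is an assumption for the sketch, not the payload n8n actually received.
SAMPLE_ERROR = """
{
  "error": {
    "message": "The response was filtered due to the prompt triggering Azure OpenAI's content management policy.",
    "param": "prompt",
    "code": "content_filter",
    "status": 400,
    "innererror": {
      "code": "ResponsibleAIPolicyViolation",
      "content_filter_result": {
        "hate": {"filtered": false, "severity": "safe"},
        "jailbreak": {"filtered": true, "detected": true},
        "self_harm": {"filtered": false, "severity": "safe"},
        "sexual": {"filtered": false, "severity": "safe"},
        "violence": {"filtered": false, "severity": "safe"}
      }
    }
  }
}
"""


def filtered_categories(error_body: str) -> list[str]:
    """Return the names of content-filter categories that blocked the prompt.

    Returns an empty list when the body is not a content-filter error.
    """
    error = json.loads(error_body).get("error", {})
    if error.get("code") != "content_filter":
        return []
    results = error.get("innererror", {}).get("content_filter_result", {})
    # A category blocked the request if its "filtered" flag is true.
    return sorted(name for name, result in results.items() if result.get("filtered"))


if __name__ == "__main__":
    print(filtered_categories(SAMPLE_ERROR))
```

Run against the sample, only `jailbreak` is reported as filtered, which matches what the expanded error details in n8n point at.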

The Solution

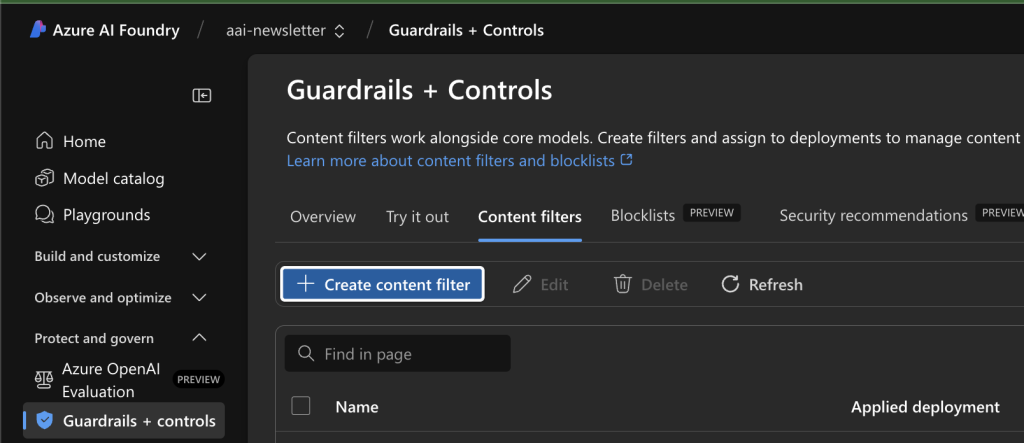

So what do you do when a default doesn’t work? You customise it! Azure AI content filters are no exception, and they are very easy to customise:

- Go to Azure AI Foundry and, of course, make sure you are in the right project.

- Click Guardrails + Controls in the left side panel.

- Select the Content filters tab.

- Click the Create content filter button to start the custom content filter wizard.

- Provide a name for your content filter on the Basic information page.

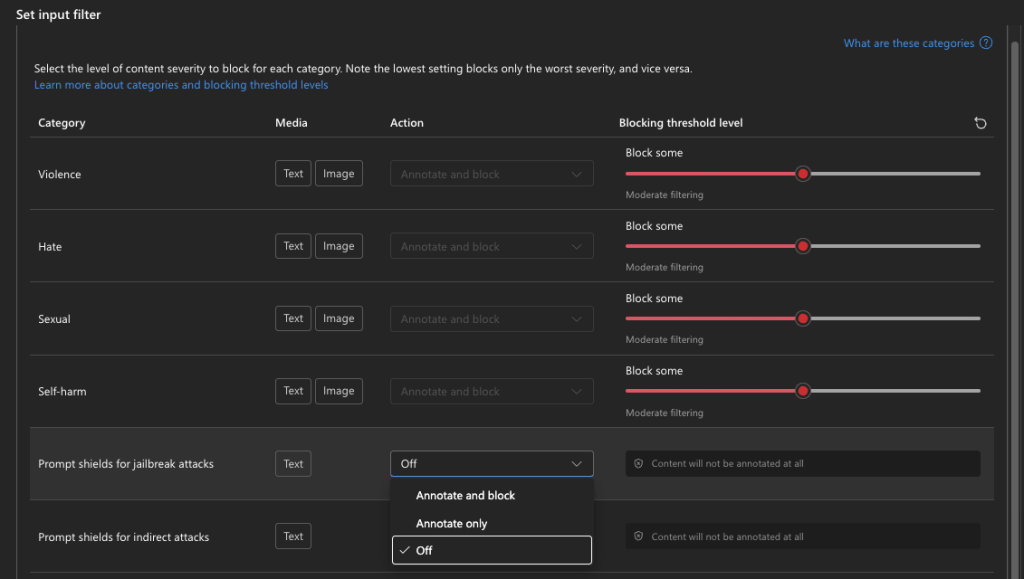

- The Input filter page is where we need to make the changes. Find the Prompt shields for jailbreak attacks category and set its action to either Annotate only or Off. (Selecting Annotate only still runs the respective model and returns annotations in the API response, but it does not filter content.)

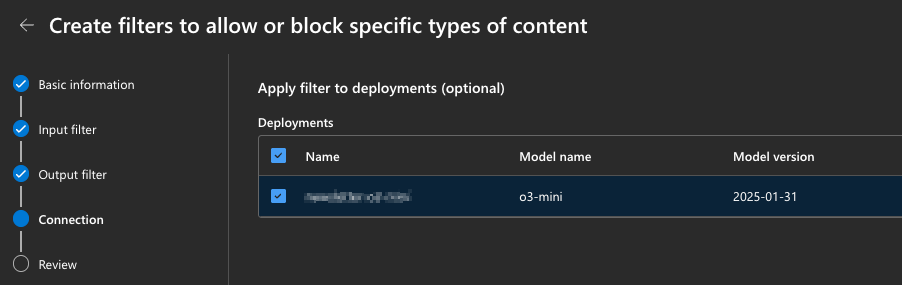

- Click Next twice to get to the Connection step.

- Here, you will select the deployment that you want to apply this content filter to.

- Hit Next and then Replace in the Replace existing content filter dialogue box.

And that’s it. The next time I executed this step in n8n, it ran successfully.
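If you choose Annotate only rather than Off, the request goes through but the jailbreak detection is still reported. The sketch below assumes the chat completions response carries these annotations under `prompt_filter_results[*].content_filter_results`, following the Azure OpenAI annotation format; the sample response is illustrative and truncated, and `jailbreak_annotations` is a hypothetical helper you could use to log when the shield would have fired.

```python
import json

# Illustrative, truncated chat completions response with prompt annotations.
# Field names follow the Azure OpenAI content-filter annotation format;
# treat the exact shape as an assumption.
SAMPLE_RESPONSE = """
{
  "choices": [{"index": 0, "message": {"role": "assistant", "content": "Hi!"}}],
  "prompt_filter_results": [
    {
      "prompt_index": 0,
      "content_filter_results": {
        "hate": {"filtered": false, "severity": "safe"},
        "jailbreak": {"filtered": false, "detected": true}
      }
    }
  ]
}
"""


def jailbreak_annotations(response_body: str) -> list[int]:
    """Return indexes of prompts flagged as jailbreak attempts but not blocked."""
    response = json.loads(response_body)
    flagged = []
    for entry in response.get("prompt_filter_results", []):
        jailbreak = entry.get("content_filter_results", {}).get("jailbreak", {})
        # With "Annotate only", detection is reported while "filtered" stays false.
        if jailbreak.get("detected") and not jailbreak.get("filtered"):
            flagged.append(entry.get("prompt_index", 0))
    return flagged


if __name__ == "__main__":
    print(jailbreak_annotations(SAMPLE_RESPONSE))
```

This is one argument for Annotate only over Off: you keep visibility into what the shield thinks of your prompts without having your workflow blocked.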

NOTE: Of course, guardrails in general, and content filters specifically, exist for very good reasons, so you should be very careful when tweaking or turning them off. Always consider who will have access to this inference endpoint and what data it can access.

But since I was only playing with it in my personal environment, I didn’t mind making these tweaks to the content filter.

More posts related to my AI journey:

“Create a Custom Skill for Azure AI Search” lab fails

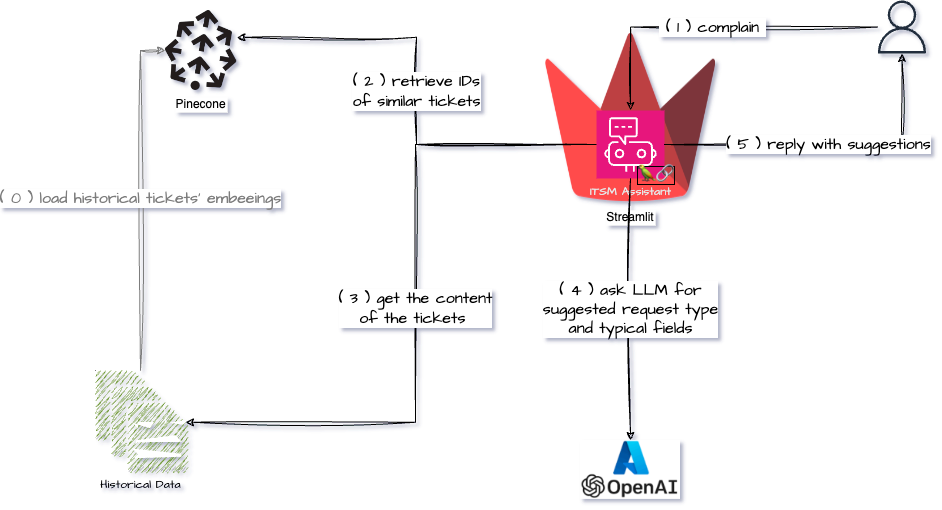

Learning about RAG and Vector Databases

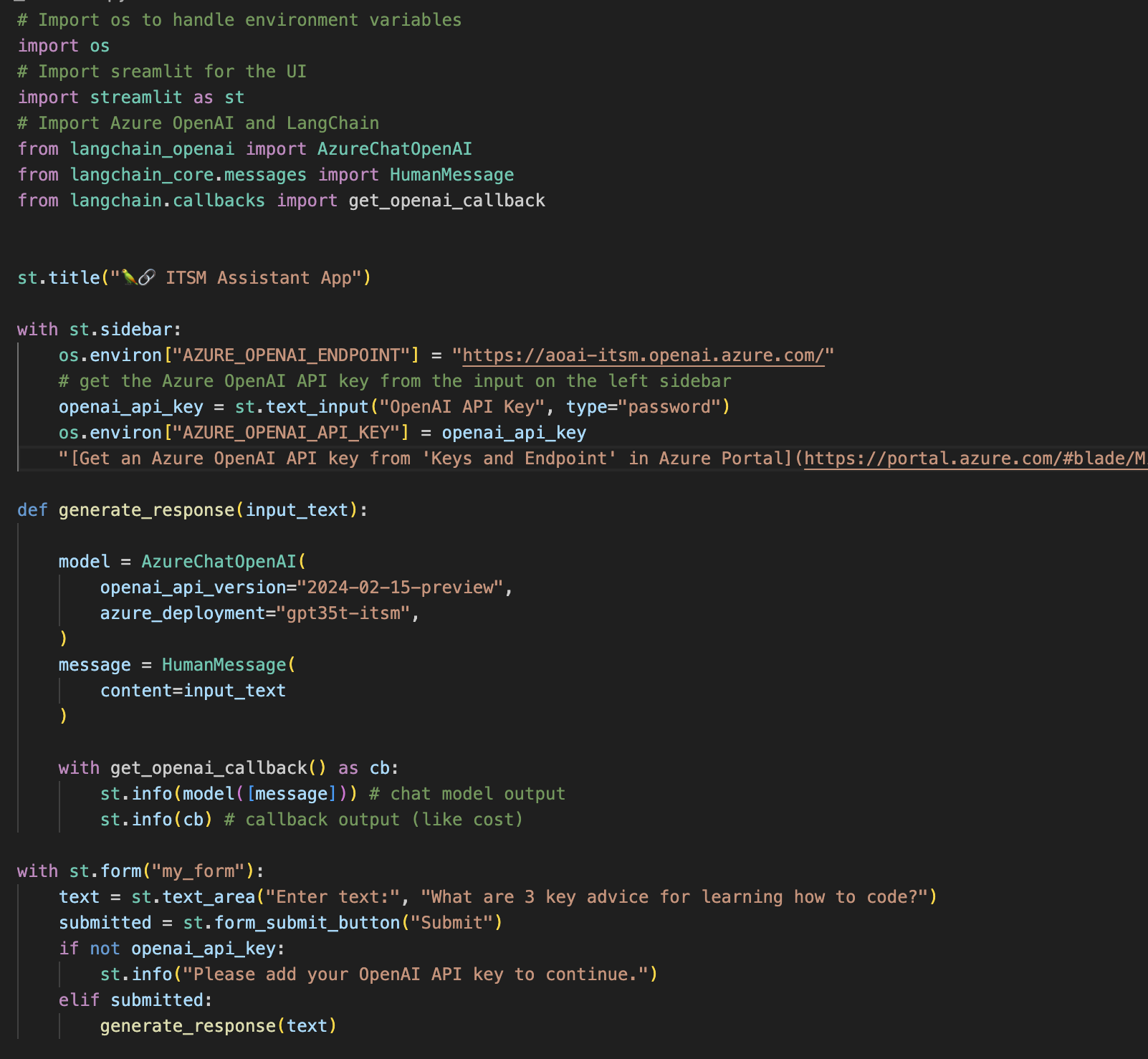

Streamlit Langchain Quickstart App with Azure OpenAI

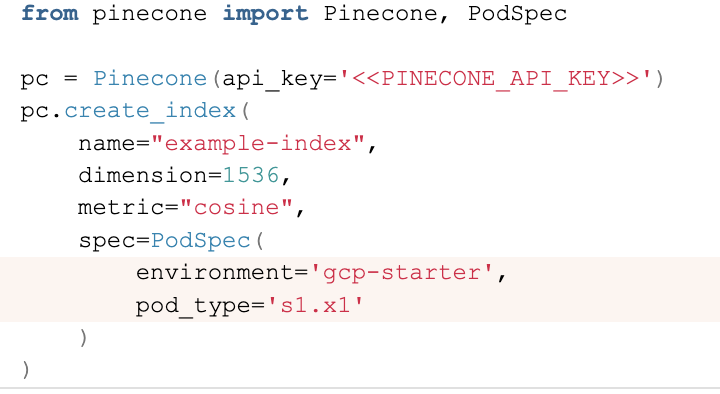

Create a free pod index in Pinecone using Python

Getting ImageAnalysisResultDetails in Azure AI Vision Python SDK![]()

Azure: Invalid user storage id or storage type is not supported