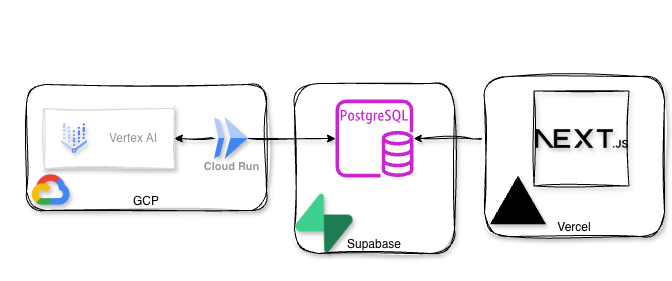

I have a little project I’ve been playing with, MentionVault.com. It’s a platform that lets you look up guests on various podcasts and see what was mentioned in each episode. So I was thinking, I can’t be that shoeless cobbler: how come I have an application and no observability for it?! That’s how I decided to try a Splunk O11y deployment for my app.